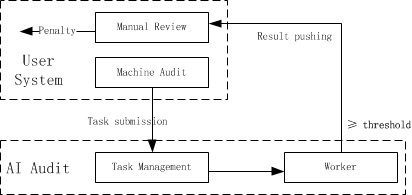

AI moderation can help an enterprise's security review department carry out content moderation. Because machine moderation can misjudge content, manual intervention is usually required to make the final decision and apply any penalty.

A typical process that includes manual moderation works as follows:

When moderating real-time streams and live streaming interaction, the system automatically saves the video screenshots and voice stream clips identified as suspected or illegal, and returns their URLs together with the moderation result to the caller.

Each URL remains valid for a limited period (normally 3 hours). To keep the evidence for longer, export the file before the URL expires.
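Exporting the evidence can be as simple as copying the file behind the returned URL into your own storage. The following is a minimal sketch using only the Python standard library; the function name `export_evidence`, the destination directory, and the fallback file name are illustrative assumptions, not part of the moderation API.

```python
import shutil
import urllib.request
from pathlib import Path
from urllib.parse import urlparse


def export_evidence(url: str, dest_dir: str, timeout: float = 30.0) -> Path:
    """Copy the file behind a temporary evidence URL into dest_dir.

    Hypothetical helper: call it soon after the result arrives,
    because the URL is only valid for a limited period.
    """
    target_dir = Path(dest_dir)
    target_dir.mkdir(parents=True, exist_ok=True)

    # Name the local copy after the last path segment of the URL.
    name = Path(urlparse(url).path).name or "evidence.bin"
    target = target_dir / name

    # Stream the response to disk instead of loading it all into memory.
    with urllib.request.urlopen(url, timeout=timeout) as response, open(target, "wb") as out:
        shutil.copyfileobj(response, out)
    return target
```

In practice you would probably also record the moderation result next to the saved file, so that the evidence can be traced back to the decision it supports.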

Each moderation API has a context field that is passed through to the caller along with the result. You can use this field to carry relevant service information.
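One way to use the context field is to serialize your service information into it when submitting a request and to decode it again when the result comes back. The sketch below assumes that the field accepts a string and that the result is available as a dictionary with a `context` key; the keys `room_id` and `user_id` and both helper names are hypothetical.

```python
import json


def build_context(room_id: str, user_id: str) -> str:
    """Serialize service information into a string for the context field."""
    return json.dumps({"room_id": room_id, "user_id": user_id}, sort_keys=True)


def read_context(result: dict) -> dict:
    """Recover the service information from a moderation result."""
    raw = result.get("context")
    # An absent or empty context yields an empty dict rather than an error.
    return json.loads(raw) if raw else {}
```

Because the value comes back with the result, the reviewer's tooling can locate the affected room or user without a separate lookup table.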

When moderating real-time streams and live streaming interaction, content identified as suspected or illegal is returned to the caller.

The AI moderation system can apply a different push threshold to each service, detection type, and label.

Users cannot currently change these thresholds themselves. To raise or lower the threshold of a label, and so increase or reduce the number of violations pushed, contact technical support.

In most cases, manual moderation can rely on the flagged content and the suggestion field of the returned result. A value of 'review' indicates that the content is suspected and requires attention, and 'block' indicates that the content is illegal and warrants punishment.
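The suggestion value can drive which manual queue a result lands in. The following sketch assumes the result is a dictionary with a `suggestion` key; the queue names and the function `route_result` are hypothetical and only illustrate the idea.

```python
def route_result(result: dict) -> str:
    """Pick a manual-moderation queue from the suggestion field."""
    suggestion = str(result.get("suggestion", "")).lower()
    if suggestion == "block":
        # Identified as illegal: a reviewer confirms and applies the penalty.
        return "confirm_violation"
    if suggestion == "review":
        # Suspected content: a reviewer decides whether it is a violation.
        return "manual_review"
    # Any other value is left for the caller to handle.
    return "unhandled"
```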

You can further classify, sort, and filter pushed results by the label and rate returned with each result, so that manual moderation is more focused.
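Grouping by label and sorting by rate might look like the sketch below. It assumes each result is a dictionary with `label` and `rate` keys and that a higher rate means a stronger match; the scale of `rate`, the `min_rate` cutoff, and the function name are assumptions to adapt to the values your service actually returns.

```python
from collections import defaultdict


def group_for_review(results: list, min_rate: float = 0.0) -> dict:
    """Group results by label and order each group by descending rate."""
    grouped = defaultdict(list)
    for result in results:
        # Drop results below the cutoff so reviewers see fewer weak matches.
        if result.get("rate", 0.0) >= min_rate:
            grouped[result.get("label", "unknown")].append(result)

    # Strongest matches first within each label.
    for items in grouped.values():
        items.sort(key=lambda r: r.get("rate", 0.0), reverse=True)
    return dict(grouped)
```

Reviewers who specialize in one kind of violation can then work through a single label's list from the top.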

For other use cases, you can adapt this solution to your own system. If you have any suggestions, feel free to contact us.
